TealFlow

Clinical Trial Apps in Hours, not Weeks

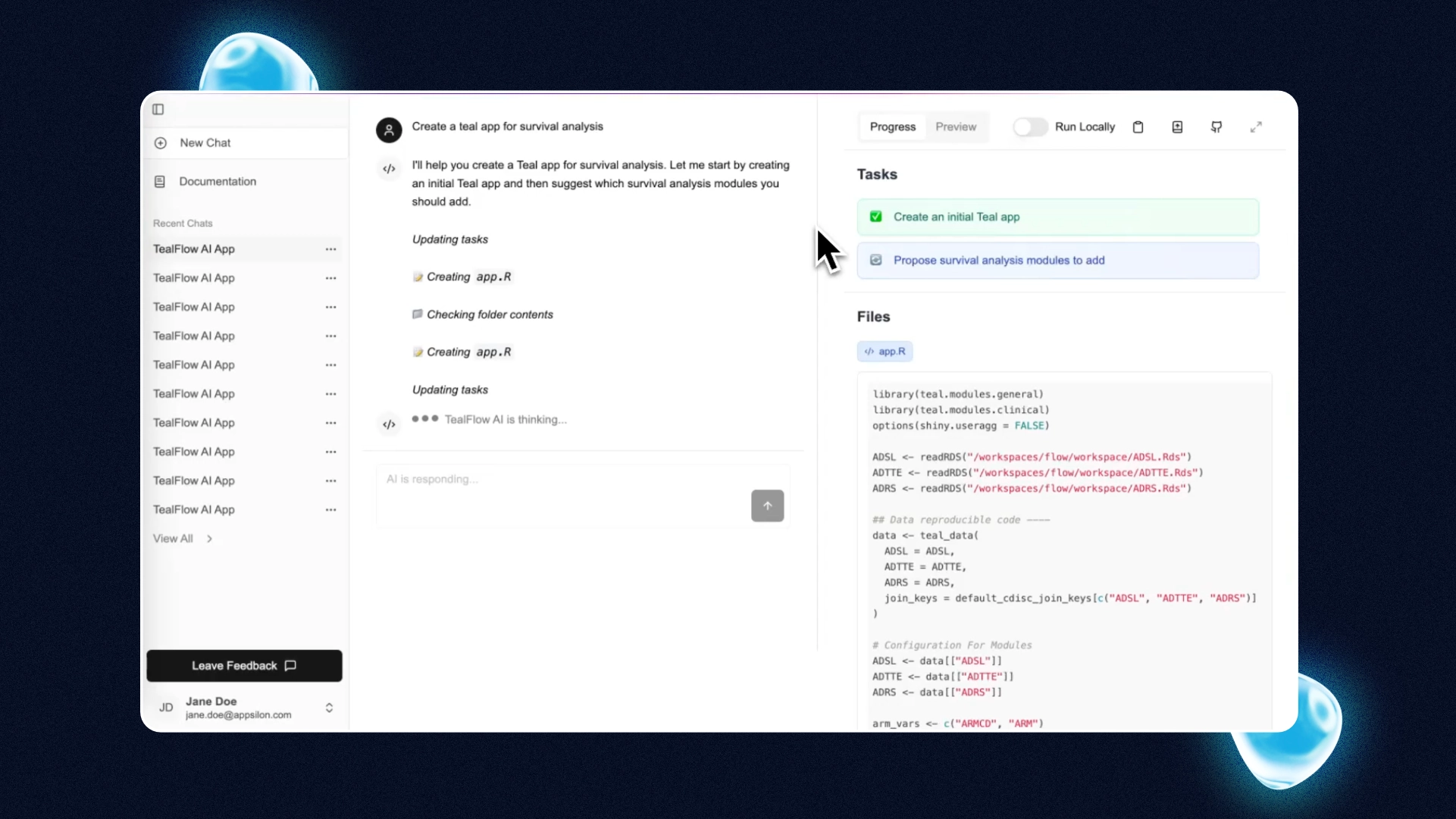

TealFlow combines trusted {teal} modules with AI to generate interactive, validated clinical trial analysis apps, with no coding required. Get a working prototype in minutes.

The Fastest Path to Clinical Trial Apps

See TealFlow in action: watch how an app is built in minutes.

Chat-based creation

Build apps with simple dialogue.

Rapid prototyping

Go from idea to a proof of concept (POC) in minutes and to a full app within hours.

Human-in-the-loop

Ensures quality and compliance.

No AI hallucinations

Only configures trusted {teal} modules.

Legacy systems are slow, complex and exclusive.

Clinical research teams are drowning. Traditional clinical app development takes several months, requires deep coding expertise, and leaves non-programmers waiting on dev teams. Backlogs grow, filings are delayed, and valuable insights sit idle instead of guiding decisions. The result? Teams lose speed, efficiency, and competitive edge.

Use Cases for TealFlow

Value across every level of your clinical data organization.

For Business Users

Prototype apps independently using natural-language commands, with no coding required.

Generate insights in minutes, not weeks.

For Developers

Generate clean, review-ready code.

Skip repetitive setup and start from production-ready code that can be deployed immediately.

For Leadership

Accelerate filings, reduce timelines, and unlock operational efficiency. Empower business users to generate insights directly, reducing developer bottlenecks.

How It Feels to Work with Us

Andrea Nicolaysen Carlsson

Technology Manager Electrodes at Elkem ASA

Director at Top10 Pharma Company

Director at Top5 Pharma Company

Let’s Build Your Data Science Environment

FAQs About Scientific Computing Environments (SCE)

Answers to the most common questions about data platforms, cloud solutions, and infrastructure best practices.

Why Is Data Governance Critical for Enterprise Success?

Data governance ensures data quality, security, and regulatory compliance, enabling organizations to make accurate decisions and maintain trust in their data assets.

What Is Infrastructure as Code (IaC)?

Infrastructure as Code is the process of managing and provisioning IT infrastructure using code instead of manual configuration. It allows for automation, version control, and scalability, ensuring consistent and repeatable infrastructure setups.

How Can I Optimize Costs in Data Science Projects?

Cost optimization involves right-sizing your infrastructure, using cloud cost-management tools, automating workflows, and relying on serverless services or spot instances to reduce idle resources and improve efficiency.

What Is a Scientific Computing Environment (SCE) and Why Is It Important?

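A Scientific Computing Environment (SCE) is a specialized infrastructure for managing complex data analysis and modeling, particularly in data-intensive industries such as life sciences and pharma. It matters because it gives scientists a consistent, reproducible, and governed place to run their analyses at scale.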

How Can CI/CD Pipelines Enhance Data Project Efficiency?

CI/CD pipelines automate code integration and deployment, reducing manual errors, improving delivery speed, and ensuring consistent updates for data-driven applications.

What Is Data Orchestration, and How Does It Benefit Enterprises?

Data orchestration automates the movement and transformation of data across systems, improving accessibility, consistency, and readiness for analytics.